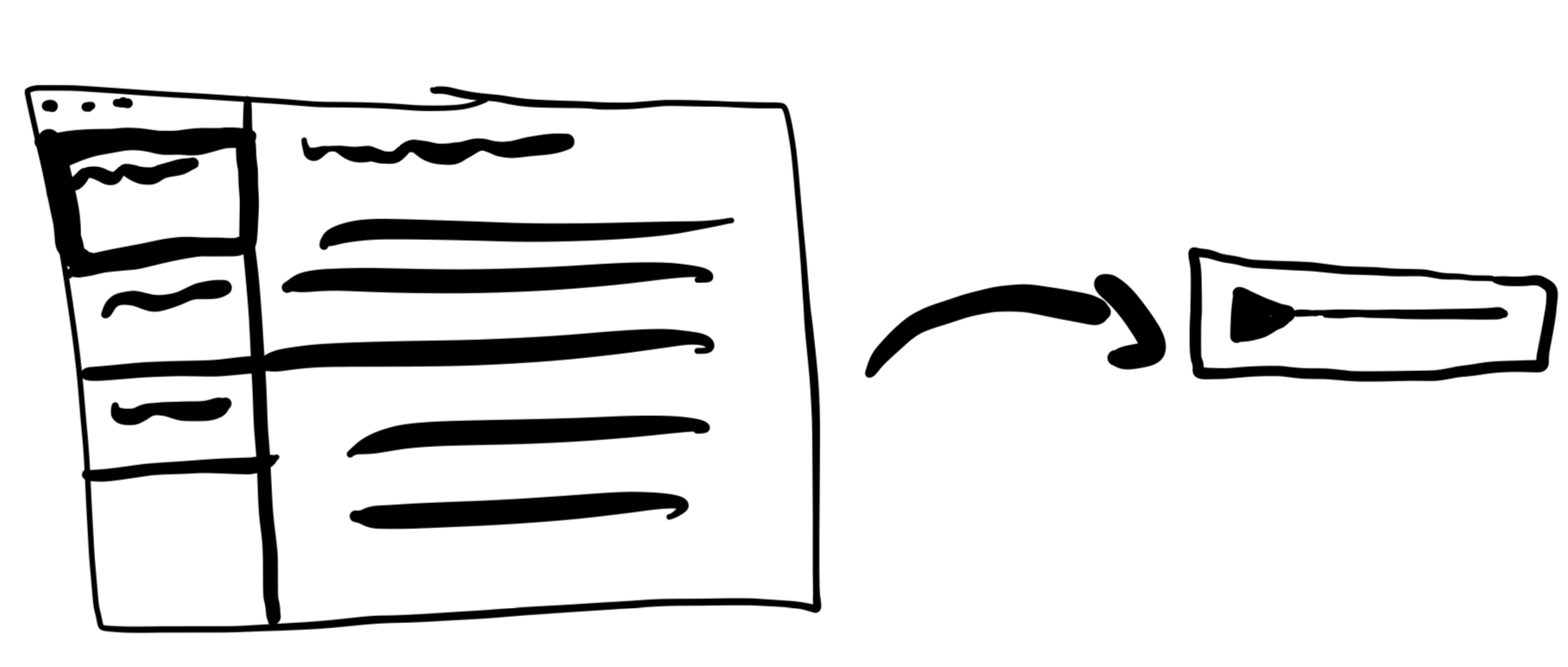

Transform Newsletter Chaos into Your Personal Podcast

Break free from inbox overwhelm. Get the insights you love, delivered as a weekly AI-powered podcast episode – right to your WhatsApp.

How It Works

Tell Us What You Love

Connect your favorite newsletters to Lettercast. We'll handle the inbox management.

We Curate, You Listen

Every week, our AI creates a personalized podcast episode highlighting the most relevant insights from your subscriptions.

Wherever You Are

Get your personalized audio digest and text summary via WhatsApp. Listen during your commute, workout, or morning coffee.

See It In Action

12 boring AI opportunities quietly making millions rn

my notes from conversations with 30+ founders

Hey there, solopreneur! I've been sitting on something big.

For the past 4 weeks, I've been deep in the AI startup trenches, having intense conversations with over 40 founders, content creators, and entrepreneurs who are actively building right now.

My Cyprus balcony has become a virtual war room of sorts. Between Mediterranean sunsets, I've been mapping out patterns, discovering playbooks, and uncovering opportunities that nobody seems to be talking about.

Here's the thing that keeps hitting me:

While everyone's recycling the same "AI will change everything" headlines on Twitter, the real opportunities are hiding in plain sight. They're not sexy. They're not trending. But they're making real money right now.

TLDR: I've uncovered 12 distinct playbooks that are working in the AI space right now, and I'm about to share every single detail with you - no gatekeeping, no fluff.

Let me break down exactly what I'm seeing in the market, playbook by playbook...

The AI Consumer App Playbook

Here's something fascinating I noticed in my founder calls:

The biggest wins aren't coming from building new things - they're coming from reimagining what already works.

The formula is surprisingly straightforward:

- Find a winning app from the last 5-10 years

- Look for any mechanical data entry or manual output reading

- Add AI to eliminate that friction

- Use short-form video (especially TikTok) as your viral mechanism

Want to know something wild?

One founder found massive success simply by tackling the calorie-tracking problem. Instead of manual logging, they built an app that estimates calories from photos.

Is it perfect? No. But it's "good enough" for most users, and it's exploding.

The key isn't perfection - it's removing friction.

Decrease Friction

Most AI tools are built by technical people who love to tinker with systems. But here's the problem - most users are completely the opposite.

The playbook I'm seeing work:

- Find tools people use DESPITE hating them (that's your gold mine)

- Rebuild that tool but remove 90% of the options

- If you can completely remove prompting, even better

- Focus on ONE core function that delivers value

I've experienced this firsthand - most automation tools still feel like they're built for engineers. The opportunity to go simple here is absolutely insane.

Quick reality check: If you need users to learn prompting, you've already lost 95% of your potential market.

Check social media comments on popular apps (especially on X), App Store reviews, and builder Discord channels to spot what frustrates users.

If you have an audience, running a quick survey works great too.

Vertical Domination

This one's counterintuitive, but stick with me:

Seeing tons of AI products in a niche? That's actually great news - it means there's huge demand and traffic.

A great example: AI copywriting tools. There are thousands of them.

But how many are specifically built for screenplay writers? How about personal brand newsletters?

The strategy breaks down like this:

- Look for a booming product

- Niche down until it hurts (I mean REALLY hurts)

- Go hyper-focused on one specific audience

- Own that micro-market completely

The goal isn't to be the best - it's to be the only one. When you're the only AI tool specifically for newsletter writers who focus on personal branding, you don't have competition. You have a monopoly.

The Hardware Revolution Thesis

This is the one that keeps me up at night:

While everyone's building software, the real opportunity might be in the physical world. I recently came across something wild - a teddy bear running a local LLM that tells children stories. Sounds like Black Mirror, right?

But this points to something bigger. As AI hardware becomes more accessible, we're about to see intelligence embedded in the most unexpected places.

The question to ask yourself:

- What physical products would be genuinely improved by having a voice?

- What objects could benefit from understanding context?

- Where could local AI processing create magic?

The moat here is massive. Yes, it's harder than building another SaaS tool - but that's exactly why the opportunity is so big.

Speaking of opportunities that most are missing...

AI Accountability

A pattern that became too obvious to ignore during several founder calls:

All these apps using human accountability - fitness coaches, nutritionists, language tutors - are ripe for disruption. But not in the way you might think.

The framework is simple:

- Replace human accountability with AI voice models that actually call you

- Let users choose personalities (strict, funny, wise)

- Focus on areas where immediate feedback matters most

Imagine an AI nutritionist calling you daily about your MyFitnessPal entries. Not just app notifications - but a voice checking in on your meals, suggesting better choices, and keeping you on track toward your goals.

I'm seeing early movers absolutely crushing it in this space.

No-Code Infrastructure

Something massive is happening in the no-code world:

Tools like Cursor and Replit are exploding, but here's what most people miss - there's a whole ecosystem of opportunity around them.

One founder I spoke with built a simple tool that converts app screenshots into prompts for Replit. The result? Nearly 1:1 builds without writing code. That's just scratching the surface.

The step-by-step approach:

- Watch where beginner devs struggle with tools like Cursor/Replit

- Build micro-SaaS solutions to solve those specific pain points

- Focus on making the complex feel simple

The Enhanced Productivity Play

This one's fascinating because it combines trusted frameworks with AI power:

Instead of inventing new productivity methods, smart founders are enhancing proven ones:

- Take frameworks like Atomic Habits or GTD

- Add AI for personalized accountability

- Build in learning capabilities so the AI improves its advice over time

- Focus on maximum output through personalization

The same approach works beyond productivity - think personal finance, fitness, learning... anywhere people already trust established methods.

Voice Interface

Picture this: You're driving, and instead of struggling with a flight booking website, you're just having a conversation with an AI agent who handles everything.

The opportunity here isn't just in converting existing apps to voice - it's in reimagining entire experiences:

- Focus on situations where typing is inconvenient

- Look for tasks people do on the go

- Think about activities where hands-free interaction makes sense

Just like some people prefer audiobooks to reading, a whole segment of users will prefer voice-first software.

The key insight? Different formats for different contexts. Not everyone wants to type or tap all the time.

The Unbundling Movement

Here's a pattern that keeps showing up in my research:

Most AI tools are trying to be everything to everyone. But guess what? The real success stories are doing the exact opposite.

Take Magnific as an example. They didn't try to build an all-in-one AI image suite. They focused on one thing - upscaling - and absolutely dominated.

Here's how successful founders are doing it:

- Find popular AI tools that are bloated with features

- Identify their most-used feature (usually just 1-2 that people actually care about)

- Strip everything else away

- Make it faster and cheaper than the competition

You don't need 100 features. You need the RIGHT feature, executed perfectly.

The Middle-Man Killer

This one's particularly exciting - I recently spoke with a founder who's building something fascinating:

They're using AI to help brands find and reach out to perfectly matched creators, completely eliminating the need for traditional agencies (no affiliation).

Here's the exact blueprint:

- Find tiresome processes typically handled by agencies

- Look for workflows that can be automated through scraping/mass data analysis

- Build AI agents to replace the middle-man steps

- Sell directly to brands

The opportunity? Anywhere there's a middleman charging premium fees for mainly administrative work.

Builder Education

This isn't exactly a software play, but the demand is absolutely insane right now:

The hunger for AI education, especially around coding, is off the charts.

But here's the key - don't try to teach everything.

The winning approach I'm seeing:

- Focus on becoming an expert in ONE tool (like Cursor)

- Structure everything as challenges ("Build Your First AI App This Weekend")

- Make it pure output-focused ("By Sunday, you'll have a working product")

- Build communities around shared achievements

Remember: People want depth, not breadth. They'd rather complete one real project than watch 100 theory videos.

Knowledge Productization

This last one is a sleeper hit that's about to explode:

Imagine taking a sommelier's lifetime of wine knowledge and turning it into an AI service. Or a master chef's understanding of flavor combinations. That's where this is headed.

The playbook:

- Help experts extract their domain knowledge

- Train specialized models with their expertise

- Create subscription services around this knowledge

- Focus on hyper-specific niches where generic AI falls short

AI pilot reshapes global education

Good morning, AI enthusiasts. A quiet teaching experiment in Nigeria just shattered expectations — turning six weeks of AI tutoring into a two-year educational leap.

Could a powerful AI-driven learning model be the answer to the global education crisis?

In today's AI rundown:

- AI tutoring shows stunning results

- Apple pulls AI news summaries after false headlines

- Transform your resume with AI feedback

- Microsoft unveils AI model for materials discovery

- 4 new AI tools & 4 job opportunities

AI & EDUCATION

📚 AI tutoring shows stunning results

The Rundown: A new study in Nigeria just revealed that students using AI as an after-school tutor made learning gains equivalent to two years of traditional education in just six weeks — showcasing the power of AI-driven learning in developing regions.

The details:

- The World Bank-backed pilot combined AI tutoring with teacher guidance in an after-school setting, focusing primarily on English language skills.

- Students significantly outperformed their peers in English, AI literacy, and digital skills, with the impact extending to their regular school exams.

- The intervention showed huge improvements, particularly for girls who were behind, suggesting AI tutoring could help close gender gaps in education.

- The program impact also increased with each additional session attended, suggesting longer programs might yield even greater benefits.

Why it matters: This represents one of the first rigorous studies showing major real-world impacts in a developing nation. The key appears to be using AI as a complement to teachers rather than a replacement — and results suggest that AI tutoring could help address the global learning crisis, particularly in regions with teacher shortages.

APPLE

🤖 Apple pulls AI news summaries after false headlines

The Rundown: Apple just temporarily disabled its AI-powered news summary feature in Apple Intelligence after multiple instances of the system generating completely false headlines, including fabricated stories about arrests and deaths that never happened.

The details:

- The feature launched in September with the iPhone 16 and was intended to condense multiple news notifications into brief summaries.

- Major news organizations, including the BBC and the Washington Post, complained that the feature contradicted original reporting and undermined trust.

- The BBC complained about the feature as early as December, urging Apple to remove it due to critical factual errors in breaking news reporting.

- Apple said it plans to make AI-generated summaries more clearly labeled and give users more control over which apps can use the summarization feature.

Why it matters: Apple Intelligence has been underwhelming, to say the least, and letting mistake-prone summaries get pushed out for a month hurts not only the public's trust in journalism but also trust in AI-infused products in general. Apple has a long way to go before its AI matches both its competitors and the hype at launch.

AI TRAINING

💼 Transform your resume with AI feedback

The Rundown: NotebookLM provides personalized, detailed feedback on your resume through interactive conversations that highlight your professional achievements.

Step-by-step:

- Head over to NotebookLM and log in or create a free account.

- Upload your resume in PDF/Word format, or paste its contents directly.

- Click "Customize" in Audio Overview to steer the focus of the AI's analysis.

- Select "Join" in interactive mode to ask specific questions.

Pro tip: Keep track of the strongest improvement suggestions for future updates.

MICROSOFT

🔬 Microsoft unveils AI model for materials discovery

The Rundown: Microsoft Research just published MatterGen, an AI model that can generate new materials with specific properties, marking a major shift in how scientists discover and design new materials.

The details:

- The model uses a diffusion architecture that simultaneously generates atom types, coordinates, and crystal structures across the periodic table.

- In tests, MatterGen produced stable materials over 2x more effectively than previous approaches, with structures 10x closer to their optimal energy states.

- A companion system called MatterSim helps validate the generated structures, creating an integrated pipeline for materials discovery.

- The model can be fine-tuned to create materials with specific target properties while considering the design's practical constraints, such as supply chain risks.

Why it matters: The traditional trial-and-error approach to materials discovery is slow and expensive. By directly generating viable candidates with desired properties, MatterGen could dramatically accelerate the development of advanced materials for sectors like clean energy, computing, and other critical technologies.

AGI Will Make You Irrelevant (How To Future-Proof Yourself)

Okay, we need to talk about this.

Last week, OpenAI announced o3, their new model, and tech Twitter went absolutely insane. If you can't make sense of it, it can stir up a lot of fear about the role humans will play in the future.

What's so special about o3? Well, it scored 87.5% on the ARC-AGI benchmark, a significant improvement over previous models.

Even though the model isn't out yet, some people say we've reached AGI and that jobs won't exist within 5 years. Others, Elon included, are saying that money will be meaningless in the future. Unless we adopt the right perspective, everything we've known and everything we are doing to better ourselves and our businesses may be for nothing.

The main questions I'm interested in are:

- Will we be considered insects to our AGI overlords?

- If AGI can do everything humans can and more, what the f*** do we do?

- If jobs won't exist, what do we focus on if we want to thrive?

I've accumulated quite a few thoughts on this throughout my years of study. In this letter, I've compiled a few of the most compelling cases for human uniqueness.

I hope I can give you a positive way to make sense of the world and orient your behavior.

Buckle in, my friends. This is the greatest time to be alive.

By the way, much of this is condensed from my next book, Purpose & Profit, launching in February. It will be free (the digital version, at least).

AI vs AGI – A Critical Difference

To understand what AI is and what it means for us, we need to start at the origin of that term. Before AI, there was cybernetics, an idea laid out by Norbert Wiener in 1948.

Cybernetics (from the ancient Greek for "helmsman," a word closely related to "governor") is the idea of automatic, self-regulating control in a system. Acting, sensing, and comparing to a goal is a fundamental loop of intelligent systems. His key insight was that the world should be understood in terms of information, and that complex systems like organisms, brains, and societies error-correct toward a goal. If these feedback loops break down, the system breaks down. Entropy.

Think of a ship approaching a lighthouse at night using constant feedback. The captain sees they're drifting left of the light, steers right, then adjusts again when they've gone too far right.
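The lighthouse example is a negative-feedback loop: sense the error, act to reduce it, compare again. Below is a minimal sketch of that loop, assuming a simple proportional correction; the `steer_toward` function, the `gain` value, and the starting drift are illustrative assumptions, not anything from the letter. A gain of 1.5 deliberately overcorrects, so the ship swings past the light and back, the way the captain "adjusts again when they've gone too far right."

```python
from typing import List


def steer_toward(target: float, position: float, gain: float, steps: int) -> List[float]:
    """Proportional feedback loop: sense the error, act on a fraction of it, repeat.

    `gain` is an illustrative parameter: values between 1 and 2 overshoot and
    oscillate around the target before settling, like a captain who steers too far.
    """
    path = [position]
    for _ in range(steps):
        error = target - position   # sense: how far off the light are we?
        position += gain * error    # act: steer back toward it
        path.append(position)       # compare again on the next pass
    return path


# The lighthouse sits at 0.0; the ship starts 10 units to the left (negative = left).
path = steer_toward(target=0.0, position=-10.0, gain=1.5, steps=6)
print(path)  # [-10.0, 5.0, -2.5, 1.25, -0.625, 0.3125, -0.15625]
```

Each correction shrinks the error, but only because the loop keeps sensing and comparing; remove the feedback and the ship simply drifts.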

Two years after Wiener's introduction to cybernetics, he published The Human Use of Human Beings. Now out of print, the central idea relevant to today's world is that "We must cease to kiss the whip that lashes us." Wiener knew the danger was not in machines becoming more like humans but humans being treated like machines.

This is what AI is. A cybernetic system that needs a governor. What we know as AI today, mostly chat apps that we use to find information a tiny bit faster than with a Google search, lacks one crucial trait: agency.

AI is a specialist that needs a generalist. A tool that needs a master. AI is useful for achieving a goal it is assigned, like winning at chess or Go. As long as AI must be tested (or assigned a goal) to determine its intelligence, it is not even close to human intelligence.

Kortex AI will be going out in late January, and you'll see what I mean. It can write extremely well if you have a second brain of writing to reference, or if you're good at telling it what to do (we solve a lot of this for you). Mobile, desktop, offline, and light mode are also coming throughout January 🙂

But that's the exact problem. Most people have been trained to be specialist tools, not innovative humans in control of their journey into the unknown. It's no wonder they're scared of replacement. They should be. Since the day they were born, they've been assigned goals and have error-corrected to fit the mold their parents wanted them to fit. Go to school, get a job, retire at 65. Entrepreneurship? Yeah, right. Save your money, get good grades, and listen to authority. Take the safe route. Stay subservient to the dominant system. And before you know it, your mind will scratch and claw its way back to the life that was sold to you as comfortable. You may have the occasional desire to change (the depth of your soul crying for you to break free), but the code in your head is so powerful that it quickly squashes that feature, mistaking it for a bug.

Do you see why I talk about this in almost every letter? It may sound like I'm beating a dead horse, but specialist conditioning is arguably the destroyer of humanity because it is the destroyer of creativity. What's the solution? We'll get to that.

Back to the story. Around the 1960s, a new perception of technology emerged: that by inventing computers, we had externalized our central nervous system, our minds, and that we all now shared one singular mind. One infinite intelligence. All potential information at our fingertips.

Unfortunately, we don't hear much about cybernetics today.

Why? First, this new perception fueled poor incentives. Norbert Wiener's warnings about intelligent machines ran counter to the aspirations of his colleagues, who were interested in commercializing new technologies and wanted to profit from them. Second, John McCarthy, a computer pioneer, disliked Wiener. He refused to use the term "cybernetics" and instead coined "artificial intelligence," becoming a founding father of that field.

With the meteoric rise in discussion around intelligent machines, we're left wondering what makes humans special, or whether we were ever special to begin with. We're the only species that has made it to the moon, so there has to be something there, right?

AGI vs Humans

David Deutsch, influenced by Karl Popper, believes there is something significant about humans, and it lies in our ability to create infinite knowledge.

It starts with the need for creativity. All knowledge is created through conjecture and criticism. Trial and error. Variation and selection (in Darwinian terms).

In other words, guessing and correcting your guesses is how you accomplish anything you set your mind to. Psycho-cybernetics. This is how we learn, innovate, make progress, and understand almost anything in the universe. The difference is that humans can set their minds on anything, not just a goal they were assigned. They can discover new goals that shape how they perceive opportunities, allowing their minds to error-correct toward those goals.
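Guessing and correcting can be pictured as a variation-and-selection loop. The toy sketch below uses random-mutation hill climbing as a stand-in for conjecture and criticism; the `conjecture_and_criticize` function, the numeric goal of 42, the step size, and the seed are all hypothetical choices made for illustration, not a model Deutsch or the author proposes.

```python
import random
from typing import Callable, Tuple


def conjecture_and_criticize(
    score: Callable[[float], float],
    start: float,
    step: float,
    rounds: int,
    seed: int = 0,
) -> Tuple[float, float]:
    """Toy variation-and-selection loop (random-mutation hill climbing).

    Each round proposes a random variant of the current best guess (conjecture),
    tests it against the goal (criticism), and keeps it only if it scores better
    (selection), so mistakes are discarded and progress accumulates.
    """
    rng = random.Random(seed)
    best, best_score = start, score(start)
    for _ in range(rounds):
        candidate = best + rng.uniform(-step, step)  # variation: a new guess
        candidate_score = score(candidate)           # criticism: test it against the goal
        if candidate_score > best_score:             # selection: keep only improvements
            best, best_score = candidate, candidate_score
    return best, best_score


# Hypothetical goal: get as close to 42 as possible (higher score = closer).
best, best_score = conjecture_and_criticize(
    lambda x: -abs(x - 42.0), start=0.0, step=2.0, rounds=1000
)
print(round(best, 2))
```

Most guesses are rejected, yet because only improvements survive, the loop still closes in on the goal; the errors are the raw material of progress rather than a sign of failure.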

Deutsch believes that humans are "universal explainers." That we are capable of understanding anything that is understandable within the laws of nature.

We create explanatory theories that reveal the deep structure of reality, allowing us to guess and predict in a more efficient way that breeds faster progress with time.

This knowledge allows us to understand things we've never directly experienced, like stars and galaxies. We can understand a rocket even if we've never built one. And if we can understand it, we can eventually build it if we have the knowledge to do so. There is a logical sequence of steps, and each step requires you to have the knowledge for that step. In your practical life, if you can't achieve something, it's for the simple reason that you either don't understand it or don't have the knowledge to achieve it. For high-agency humans, this is liberating. For low-agency machines, this is blasphemy.

This is what many get wrong about AGI.

AI, or artificial intelligence, is an incomplete system that must be assigned a goal, the way many animals and low-agency employees are programmed to be.

AGI, or artificial general intelligence, is a complete or universal system. Like a human, it is not limited to a small subset of what is possible. AGI may have more computational power or memory, but there's no concept it can understand that we can't understand ourselves, and nothing rules out our using universal computers or augmenting ourselves with more computation and memory.

The point is that you can achieve anything within the realm of possibility, but only if you have the knowledge to do so.

You are not doomed to the default path of society or the rule of AGI.

The 5 Human Capabilities – Can AGI Surpass Them?

The thing about AGI is that people can't seem to settle on a definition for it.

Some people see it as a tool that will automate and run their business hands-off. Some think it will be just like us, a person. Some think it will be a superintelligence that completely surpasses human capabilities (they often label this ASI).

The last one is the most frightening because people's minds hop, skip, and jump to conclusions that we may be rendered irrelevant. When we feel stressed, our mind narrows and we can't think straight. We fail to understand.

The questions here are:

- Are human capabilities actually limited?

- Do we not have the capability to learn and do anything that AGI could do?

- Ultimately, are there any limits on what we can think and how we think?

It's fun to speculate about these things, and we could do that all day instead of focusing on what matters, so let's break these capabilities down.

Mainly, we need to pay attention to computation, transformation, variation, selection, and attention, as noted by AGI researcher Carlos De la Guardia (also influenced by Deutsch).

Computation

Is there any limit to what we can compute?

No, because once you have a universal computer in your hands, it's just a matter of time and memory to compute anything.

If AGIs have that, they would have as much computational power as us, and therefore no advantage over us. Even further, if we can augment our brains (which I don't see outside of the realm of possibility), we will remain on par with AGI as it accelerates.

Transformation

Transformation is creation. We turn raw materials into rockets given the right knowledge.

Human hands and bodies seem to be especially good at creating anything given a specific sequence of operations. We've built spaceships and telescopes. Meaning that we can build the thing that builds the thing. We are generalists that build tools to thrive in any environment. We are not animals bound to one niche.

The question is:

Is there a limit to what these basic operations can do when strung together in the right way?

Again, the answer is no. If humans could teleoperate a gorilla, there is a sequence of steps it can take to build a rocket given time. And no, I'm not saying a single gorilla. Imagine if Elon were operating the gorilla. What would he do?

The thing here is time. Transformation takes time, and a singularity won't change that, just as the Enlightenment and the Big Bang didn't. Time is a compression algorithm that prevents everything from happening at once, and the Enlightenment and Big Bang clearly didn't put rockets in the sky.

So far, the AGI worry seems to stem from a fundamental misunderstanding of reality itself.

How To Create Knowledge

After computation and transformation, there is variation, selection, and attention, which have to do with navigating idea space (or the unknown). We can compute and transform, but do we have limits on the knowledge that allows us to do so?

Knowledge serves two functions.

The first is to make specific things happen, preferably good things rather than bad. The second is to capture patterns in reality. This allows us to store information in an efficient way so that we aren't always starting from scratch in our pursuits. We understand big-picture concepts like the sun rising and setting each day and seasons changing every so often.

Without this understanding, much of our lives would fall apart. Capturing patterns allows us to plan by proximity. We understand that we would freeze to death in a cold environment, so we use deposits of knowledge like a jacket and a hotel to keep us warm while we travel.

Think of idea space—or the unknown—as a universal map with light and dark spots. The light spots are areas you've explored. The dark spots are where your potential lies.

This map is a surface area for ideas that can be discovered and tested against reality to verify their validity. When the results don't move you closer to your goal, or move you further from it, a problem is revealed, and you must error-correct toward the goal.

Variation

Is there a limit to the number of new ideas we can come up with to survive and achieve what we set our minds to?

With computation, we can navigate the entire space of ideas. With agency, we can take any step within that space and eventually stumble across a good idea (after many bad ones). With creation, we can move in unique ways, like flying over a forest rather than walking through it.

So, we can understand anything, create anything, and discover an infinite set of new ideas to solve an infinite string of problems. Again, AGI can do the same. We are both bound by the laws of nature, but any possibility inside of that is within reach.

Selection

We can come up with any idea, but can we find the good ones?

The potential problem here is that it is difficult to make cumulative progress without learning from mistakes. It wouldn't be fun to start over from scratch if we wanted to build an electric car after a gas car. We wouldn't be very developed as a species.

As universal cybernetic systems, we can become more efficient at navigating idea space to avoid wandering lost. We error-correct. No fundamental difference here either.

Attention

One other aspect that humans take for granted is our ability to change our focus by changing our perspective.

When a problem occurs, where does your attention go? If you want to build a rocket, does it help to ask the old Gods to do it for you? Or can you change lenses to view the situation in a way that allows you to perceive opportunities?

While this is a massive problem for humans—paradigm lock and attaching to ideology—we do have the capability to change where our attention goes when problems come up. We can put on a spiritual lens to find peace and a scientific lens to find progress.

Identifying with a purely ascending and "spiritual" philosophy is no different from being an incomplete system that will fail to solve certain sets of problems. Spirituality is a great lens or tool, but a bad master, and not the be-all and end-all.

Now, since AGI does not seem like it can surpass us in any way unless it bends what is possible (we would have a very different problem on our hands at that point), what do we do?

This doesn't change the fact that life as we know it will change, jobs will be replaced, and the unknown creeps nearer.

In my opinion, the only option is what it has been:

To dive in.

The Answer Has Been & Will Be To Become A Creator

"Be a creator and you won't have to worry about jobs, careers, and AI." – Naval Ravikant

As I've discussed in 80% of my letters, and as Naval so eloquently put it here, the answer is to become a creator. Specifically, a value creator.

When I say this, people assume I mean a myopic little "content creator." Someone who posts content without realizing the depth behind it.

Let's string this together to help it click:

The word "entrepreneur" is of French origin. It comes from the verb "entreprendre," which means "to undertake" or "to do something." In the 16th century when translated to English, it became a noun referring to a person who undertakes a business project.

In other words, an entrepreneur is someone who is doing something. It is not a title or label but an act. It is the commitment to a high-agency life. A commitment to doing things without permission from someone else. To set your own goals and navigate the unknown to achieve them.

And therein lies the problem. People want to be told what to do when that's exactly what will get them replaced. That doesn't mean you should stop learning from other people; it means you shouldn't treat any job, skill, or career as an end in itself.

In this sense, a "creator" is someone who is creating something. It's not a title or fancy new kind of job where you can sit in front of a camera and do minimal work. It is a way of being.

It just so happens that the highest leverage place to create—right now, at least—is on the internet. It is the path of high agency. You don't need permission to create something and post it on the internet. You don't need permission to navigate idea space and find the information you need. This may change in the future, but that only reinforces the point. No matter if it's the internet or intergalactic space, the answer has been and always will be to become a creator.

Of course, and as we've learned, that doesn't mean it's easy. That doesn't mean you can skip trial and error. That doesn't mean that one course will give you all the answers, because that's not how the mind works. That's not how time works. That's not how AGI will work when we're exposed to an entirely new set of problems once problems like jobs are solved.

You can go through something like 2 Hour Writer or the One-Person Business Launchpad, and that helps give you direction to take the first few steps into the unknown, but most people quit because they fail to realize that you still need to error-correct.

That's what creators do. They solve the infinite set of problems that life presents. Without problems, there is no creativity. Without problems, there is no purpose. Pain and suffering stem from the inability to understand problems and, more so, relinquishing your ability to solve them. A world without creativity and purpose is a world without life.

The mark of a sovereign individual is that they learn how to learn:

- They have an evolving vision for the future

- They build a meaningful project as one stepping stone

- They identify problems that prevent progress

- They generate ideas and test solutions

- They become more efficient with time

- They deposit their creation and knowledge

- If valuable, they are rewarded by the monetary system of that society

- If not valuable, they error correct until valuable

- And lastly, they never, ever quit because someone else's vision trumps their own.

Do it all. Write. Design. Market. Sell. Film. Code. Be the generalist you were born to be. Be the orchestrator of ideas. The governor of thought. AI is simply a tool that now allows you to learn and do all of these. Once it becomes your master, you lose.

Nobody can tell you what to do in the future. But has it ever been any different? We're just talking in circles at this point. You're looking for a quick fix, as always, and you know that the longest path is the quickest fix. The principles haven't changed; you just haven't taken the leap.

Thank you for reading.

I hope it helped.

– Dan

Everything you need to transform your newsletters into podcasts

Lettercast started as a fun side project I built for myself. After seeing how many people were interested in using it, I quickly added profiles and authentication to make it available for everyone.

To cover the running costs, there's a €5 monthly subscription. Feel free to use it yourself! Happy to keep adding features based on your feedback.

Why Choose Lettercast?

Save Time

Get the essence of all your newsletters in just 10 minutes

Zero Inbox Clutter

Say goodbye to newsletter overwhelm

Ultra-Convenient

Listen on WhatsApp – no new apps needed

Perfectly Personal

Each episode is crafted specifically for your interests